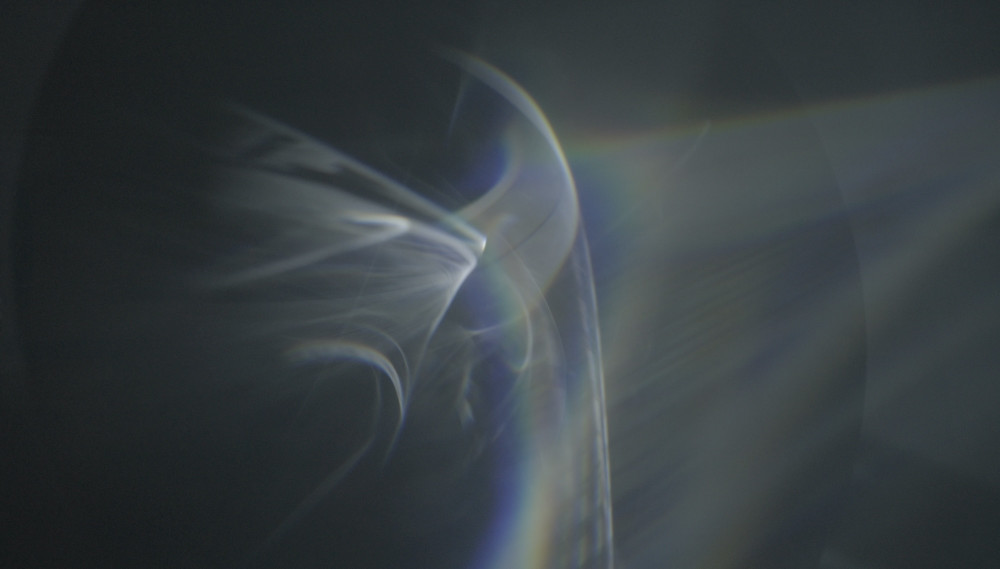

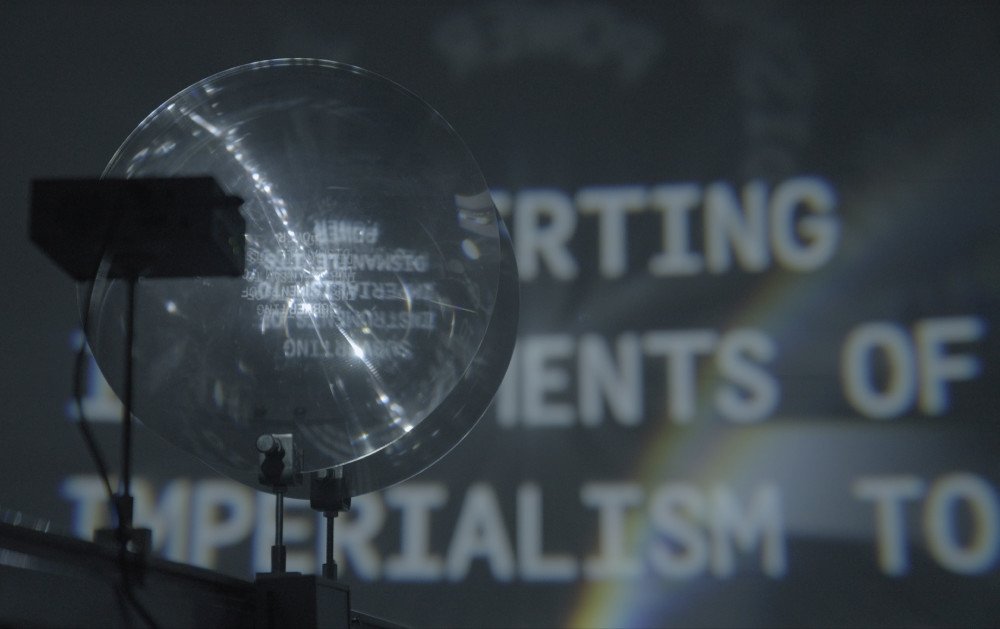

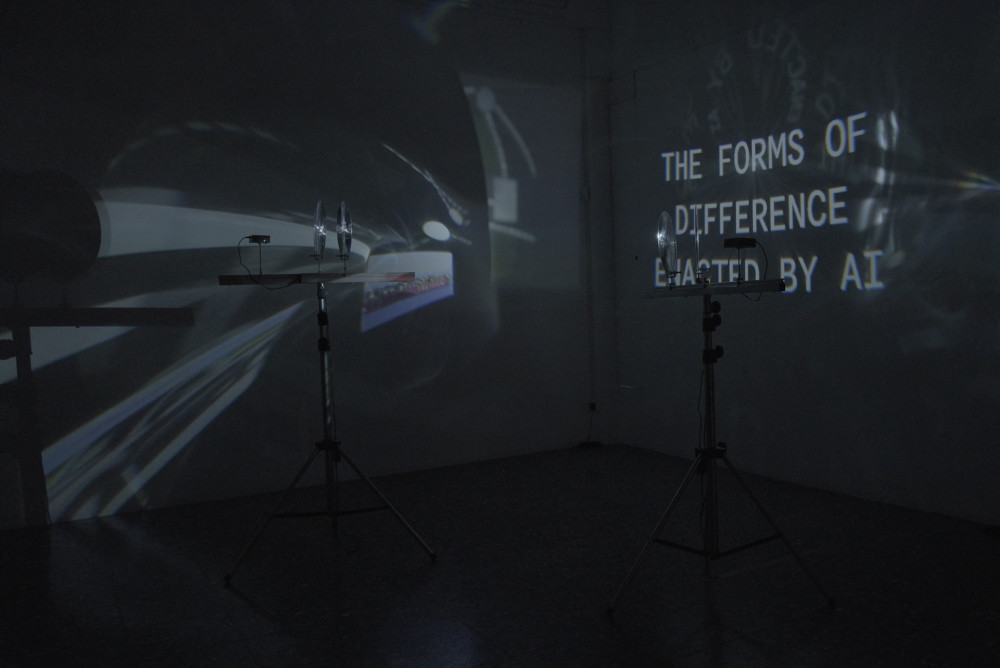

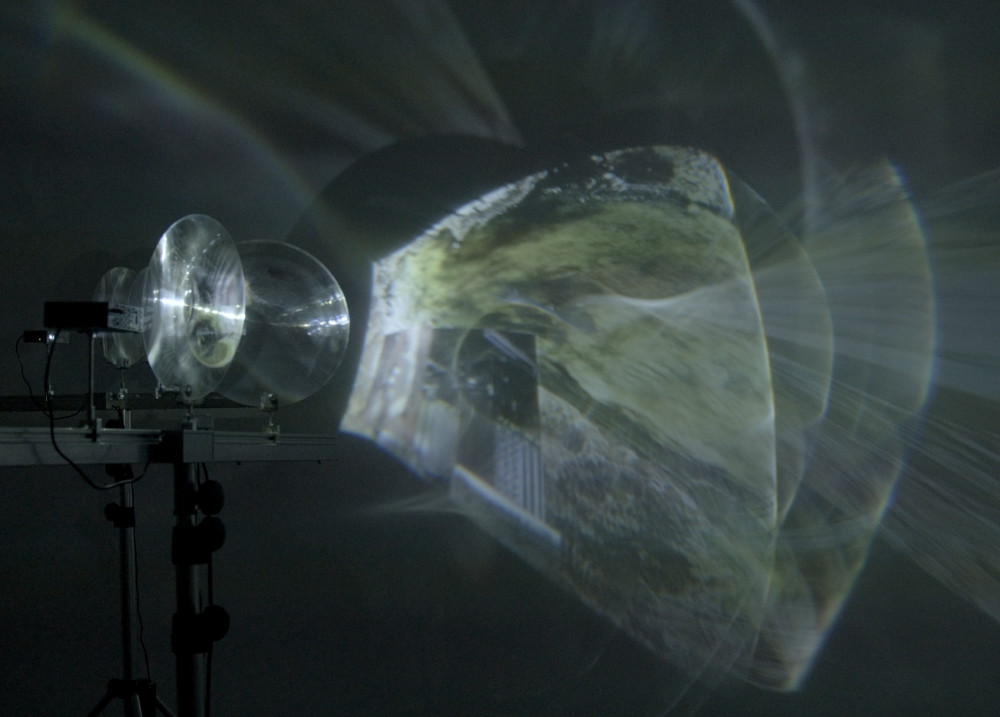

A Structural Plan for Imitation: Engines of Differentiation looks at how what is visualized through artificial intelligence is warped through machine interpretation. It questions the value systems embedded in artificial intelligence systems and how these lend themselves to the cultivation of difference. In the two-channel video installation, digital projections exploring the hyper-visuality of AI imagery are distorted through a series of Fresnel lenses. Applying strategies of détournement to the images behind the images and to machine learning training datasets, the piece brings viewers into the complex relationships artificial intelligence creates between the visual and nonvisual, data and images.

Generative artificial intelligence confronts us with so much visual data that it’s beyond our capacity to look at or make sense of it all. Not only have the machines consumed much of the existing internet, but they also increasingly run on generated content, filled with error and bias. This topic entails highly problematic issues from amassing visual data and using it with or without permission, to labeling, analysis, and recirculation of visual data. Re-appropriating images from training datasets and considering them more closely seeks to explore these layers of interpretation and reinterpretation that defy straightforward solutions.

In this work, a flood of visual information is fed through apparatuses that enlarge and distort what viewers see within the surrounding space. At various times, one can make out glimpses of coherent images: faces from biometric imaging databases; ordinary objects and animals; and aerial surveillance footage — the likes of which are used to train many computer vision systems. These are combined with images situating artificial intelligence, historically and materially, drawing from the prehistory of artificial intelligence — when supercomputers were needed to process even simple inputs and long before the idea of computers creating realistic, photographic images became commonplace — as well as documenting its resource-intensiveness: devouring not only data but also energy and precious minerals.

The narration of the piece addresses the circularity of this topic: how foundational aspects of the technology may make it difficult or even impossible to subvert its divisive tendencies. In certain respects, generated visual media defies prior conceptualizations and calls for new perspectives to address its intricacies. As we struggle to come to terms with artificial intelligence’s affordances and perils, it is especially relevant to consider to what extent these methods and their outputs align with public discourse. A Structural Plan for Imitation: Engines of Differentiation gives us a closer look at discrepancies between the promises made about artificial intelligence and the realities of how it materially acts on the world. With the current emphasis on methodologies that rely heavily on models, it’s crucial to consider the assumptions, power dynamics, and ideologies embedded in AI systems. The project delves into these ideas through models’ structuring of relationships between visual perception, real-world phenomena, and the traditional systems of value that have culminated in the present pervasiveness of artificial intelligence.

A Structural Plan for Imitation: Engines of Differentiation has been presented internationally in the following contexts:

The work has also been selected for purchase for the city of Porto's public art collection through the Pláka Acquisition initiative. In that capacity, it will be shown in the Municipal Gallery of Porto in 2026.

Exhibitions

2025 International Conference on Computation, Communication, Aesthetics & X (xCoAx), Dundee University, Scotland

2025 Parameter Generative Arts Fair, Kino Šiška, Ljubljana, Slovenia

Conference Paper Presentation

2025/07/10 A Structural Plan for Imitation: Engines of Differentiation, xCoAx 2025, V&A Dundee

Publication

Rosemary Lee. “A Structural Plan for Imitation”. In xCoAx 2025 Proceedings of the 13th Conference on Computation, Communication, Aesthetics & X, 287–289. 2025.

2025.xcoax.org/xcoax2025.pdf

ISBN 978-989-9279-05-6

DOI 10.34626/2025_xcoax

#################################

In Dialogue: Artist Talk with NeMe

NeMe: Can you provide a brief outline of your formative ideas generating A Structural Plan for Imitation: Engines of Differentiation?

Rosemary Lee: Over the course of developing the project, Alexia Achilleos and I discussed a number of different ideas that all come together in how AI is affecting visual culture currently. We started out discussing how Achille Mbembe’s concept of necropolitics feeds into Dan McQuillan’s necropolitics of AI, and how that ends up informing the way that these systems churn through data, labour, energy, and culture. We also looked at the contrast in the way that tech CEOs discuss the technology, in comparison to those working on AI ethics such as Timnit Gebru or theorists such as Matteo Pasquinelli, who has recently addressed the social dimensions of this shift towards pervasive AI.

NeMe: Can you explain the artwork’s encapsulation of Engines of Differentiation? How does this manifest for the viewer’s experience?

Rosemary Lee: The subtitle Engines of Differentiation addresses the logic of division embedded in AI systems. This theme is discussed throughout the textual element of the work, where we look at how machine learning is premised on enacting difference where it is applied. This idea is also explored in the visuals through our examination of training data, which is used to teach machine learning systems to differentiate and reproduce various aspects of input data. The aspect of differentiation extends from the simple technical side of it becoming more difficult to discern generated from non-generated content all the way to more political dimensions such as the power dynamics that AI plays a part in.

NeMe: Do the texts you reference in the videos act as a point of reference regarding a ‘structure’ or ‘imitation’?

Rosemary Lee: The title of the work comes from an amalgamation of various different ways of defining what a model is. With the rise of AI, we are more and more often encountering the effects of how algorithmic models structure the world based on replicating statistical patterns from the past.

NeMe: The installation consists of two interdependent elements, (1) the structures and (2) the video projections, yet each provides its own unique spectator engagement. Is this intentional on your part and, if yes, can you explain the motivation for this design?

Rosemary Lee: The installation sets up a relationship so that the video projections are distorted by the apparatuses. This project explores how we can think about AI differently through combining aesthetic experience with conceptual inquiry.

NeMe: Please discuss how your collaboration inspired a focus or considerations that added to or enhanced your own individual practice.

Alexia Achilleos: Despite our differing theoretical frameworks, Rosemary and I are both interested in the historical patterns found within the AI ecosystem, as well as technology itself. We both recognise that AI does not exist in a vacuum; instead, it is embedded with power, politics, and culture. I investigate AI from postcolonial, decolonial, and intersectional feminist perspectives, focusing on semi-peripheral spaces like Cyprus, an ex-colony and hybrid space between East and West. Depending on the context, Cyprus is seen either as part of the birth of Western civilisation or as an oriental Other, a status that shifts according to what is convenient at the time for those in power.

Rosemary asked me to contribute to the collaboration through such perspectives. These included how colonial-era legacies continue to be embedded in AI, neo-colonial processes perpetuated by the AI industry, the necropolitical use of AI by those in power, which follows colonial patterns, but also decolonial approaches that resist colonial AI.

NeMe: With the recent expansion and developments of AI technologies, do you think it is important for more artists to embrace the medium or do you see any issues that artists should be made aware of before they decide?

Rosemary Lee: No, I don’t think it’s necessary or even advisable for artists to embrace AI. What is important is for artists to respond to the context they work within and that increasingly includes a need to be fluent in developments such as AI becoming more pervasive and accessible.

Alexia Achilleos: AI is a top-down technology, and it is crucial that artists approach the technology in a critical manner. Newer models demand more computing power and data to train and operate, so it is becoming increasingly difficult for an individual artist to custom-train their own model. We must be aware of the power asymmetries behind such processes; therefore I believe that there needs to be a very good reason for an artist to use a pre-trained model in their work. Despite this, artists have a role in communicating the reality behind the AI hype to the wider community, rather than fuelling the hype.

NeMe: How and why will this artwork be relevant to the wider media arts community but also, to the wider public?

Rosemary Lee: This project addresses some of the discrepancies we encounter in the increasing presence of AI in visual culture. Beyond the explorations that many artists have been doing on this topic, we’re starting to see generative systems become mainstream, meaning that they affect us on a much larger scale and also in ways that are difficult to understand or remediate. As a result, the ways in which AI affects visual media are more and more relevant to contexts beyond art and technology, specifically. In our collaboration on this topic, Alexia and I tried to problematise what we see as some of the core issues in this area and to discuss how complex an issue it is. Some of the earlier waves of interest in AI relative to media art have made the mistake of treating it either in fairly black-and-white terms or as merely a tool, without the nuance of considering its philosophical, historical, and political dimensions. With this project, we tried to demonstrate how many divisive aspects actually come together in AI systems, which is important as we look towards what the future may hold.